Fortune 500 Manufacturer Adopts Flux7 Enterprise DevOps Framework as DevOps Onboarding Model

- December 06, 2018

Profile

This global manufacturer of heavy-duty machinery is a Fortune 500 company, is listed on the New York Stock Exchange and has been a trusted brand for over a century.

Opportunity

The company saw an opportunity to extend IT beyond its headquarters and have a positive impact on every customer by embedding IT solutions into the products it sells. Going beyond maintenance alerts, the manufacturer’s IT organization is creating holistic systems that give customers important data on how to run their business for maximum productivity and output.

Reaching beyond the bounds of traditional IT, the firm knew it needed a platform business model that would turn internal consumers into providers by giving them building blocks for creating their own solutions. By providing access to these digital platforms, the organization can extend the traditional definition of IT to meet the demands of internal and external customers alike, and in the process move its systems of innovation, including customer experience, analytics, IoT and ecosystem foundations, onto the cloud.

Partnership with Flux7

The partnership with Flux7 began in 2015, when the company embarked on a digital transformation that would eventually work its way through its departments, from enterprise architecture to application development and security, and its business units, such as embedded systems and credit services. What started as a limited migration to AWS has grown into a complete, agile adoption of Flux7’s Enterprise DevOps Framework for greater security, cost efficiency and reliability. Enabled by solutions that connect its equipment and customer communities, the digital transformation supports the company’s ultimate goal: an unrivaled experience for its customers and partners.

Solution

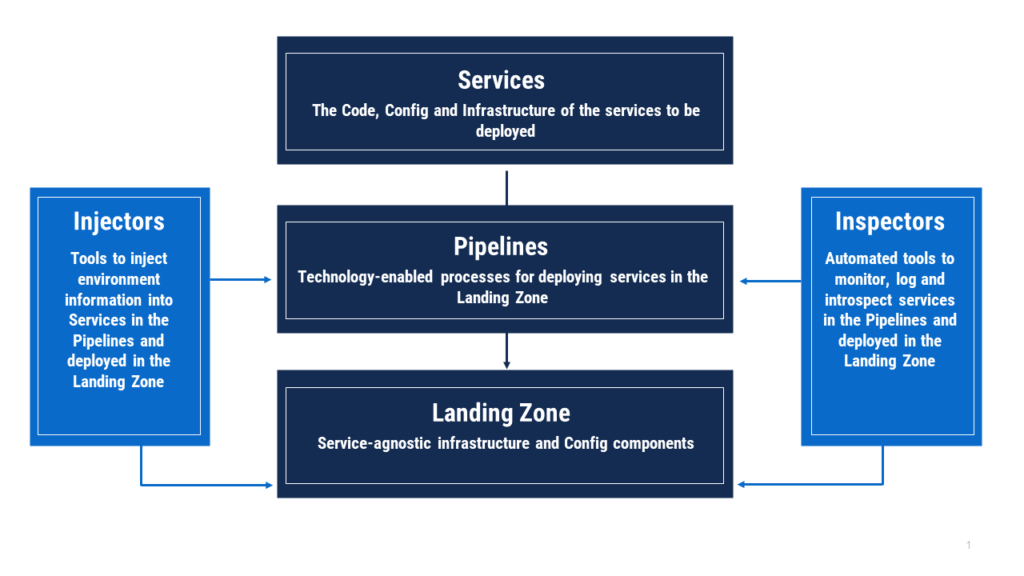

Flux7 and this manufacturer have partnered on many projects, each a milestone on the larger path toward adopting an Enterprise DevOps Framework (EDF), in which the client organization applies automation to increase its agility and security and, most importantly, to deliver a customer experience that can’t be beaten. The EDF also serves as the mechanism for furthering DevOps adoption within the organization: new teams can be onboarded to an AWS DevOps foundation and workflow, quickly adopting best practices in the process. In addition, as new technologies come to market (which they frequently do), the firm can use the EDF to adopt them easily and maintain a system that provides a business advantage.

Milestones: Data Lake to Support In-Field IoT Applications

In early 2015, we began work on our first joint project: migrating the company’s MongoDB into an AWS data lake to meet growing scalability demands from the applications it was developing to collect and process data from the field. In addition to addressing scalability, with auto scaling where possible, the data lake was designed to improve the company’s disaster recovery (DR) and backup strategy. The project also replicated the entire structure in Europe while ensuring the solution complied with EU data privacy laws.

The company’s burgeoning IoT infrastructure was based in a data center. Flux7 helped rapidly set up AWS IoT infrastructure, creating a Landing Zone and a DevOps workflow via pipelines that is agile, uses AWS and Ansible, and maintains tight security controls.

With the infrastructure in place, Flux7 helped migrate MongoDB and Hadoop from the firm’s data center to AWS. The organization’s MongoDB admin team had not automated the process of setting up a MongoDB cluster, because it did not happen often enough to warrant automation in the prior environment. By defining the infrastructure as code, Flux7 cut the time for server procurement to mere minutes. To let the MongoDB team take full advantage of this automation, Flux7 set up scripts for bootstrapping a MongoDB cluster, reducing a process that had taken the DB admins days to 15 minutes.
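The case study does not show Flux7's bootstrap scripts. As a minimal sketch of one step such a script might perform, assuming a three-member replica set and hypothetical hostnames, the Python below builds the configuration document that MongoDB's `rs.initiate()` expects and wraps it in a snippet that could be passed to `mongosh --eval`:

```python
import json


def replica_set_config(name, hosts, port=27017):
    """Build the config document that MongoDB's rs.initiate() expects."""
    if not hosts:
        raise ValueError("at least one host is required")
    members = [
        # Illustrative choice: give the first host a higher priority so it
        # is preferred as primary in elections.
        {"_id": i, "host": f"{host}:{port}", "priority": 2 if i == 0 else 1}
        for i, host in enumerate(hosts)
    ]
    return {"_id": name, "members": members}


def initiate_script(config):
    """Return a JavaScript snippet suitable for `mongosh --eval`."""
    return f"rs.initiate({json.dumps(config)})"


# Hypothetical hostnames, for illustration only.
HOSTS = [
    "mongo-0.example.internal",
    "mongo-1.example.internal",
    "mongo-2.example.internal",
]
script = initiate_script(replica_set_config("rs0", HOSTS))
```

The priority weighting is an assumption, not a requirement; MongoDB recommends an odd number of voting members so that elections can reach a majority.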

In addition, Flux7 used this opportunity to add features to the company’s internal toolchain and to support legacy application modernization by migrating those applications to AWS. Specifically, Flux7’s unique approach to dynamically assigning DNS names to machines in an AWS Auto Scaling group (ASG) made it possible to migrate legacy applications that required hard-coded DNS names.
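Flux7's actual mechanism is not described here. One common pattern, sketched below under stated assumptions, keeps a fixed pool of the names legacy applications have hard-coded and lets each new instance in the group claim the lowest free name, releasing names held by terminated instances. The function, the pool and the in-memory `claims` dict are hypothetical; a real deployment would need an atomic shared store for claims (for example, DynamoDB conditional writes) and would then update the matching Route 53 record.

```python
def assign_dns_name(instance_id, claims, live_instances, pool):
    """Return the DNS name for instance_id, claiming a free one from pool if needed.

    claims: dict mapping name -> instance ID (hypothetical shared state)
    live_instances: set of instance IDs currently in the Auto Scaling group
    pool: ordered list of the fixed names that legacy apps have hard-coded
    """
    # Release names held by instances the group has terminated.
    for name, owner in list(claims.items()):
        if owner not in live_instances:
            del claims[name]
    # An instance that already holds a name keeps it (for example, after a reboot).
    for name, owner in claims.items():
        if owner == instance_id:
            return name
    # Otherwise claim the lowest free name in the pool.
    for name in pool:
        if name not in claims:
            claims[name] = instance_id
            return name
    raise RuntimeError("no free DNS names: the pool is smaller than the group")
```

Because a replacement instance inherits the name its predecessor released, legacy clients that hard-code `app-1` keep working as the group scales in and out.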

Building on the services, Landing Zone and pipelines set up for Hadoop and the organization’s field applications, Flux7 next helped the company expand its streaming capacity by 2.5x. The streaming infrastructure was hosted in multiple data centers, and Flux7 helped migrate the communication server and supporting applications to AWS. In doing so, Flux7 made the application and infrastructure horizontally scalable, supporting up to 5,000 streams while keeping the same instance sizes as on-premises.

As a data and analytics steward for its machinery and customers, this firm is building sustainable differentiation and disrupting the competition.

Elastic High-Performance Computing (HPC)

In addition to its field applications, the business conducts scientific simulations for various aspects of designing new machinery. These HPC simulations were hosted in the company’s traditional data center, and scaling them to meet dynamic demand required careful planning and a great deal of capital expense. Moving the simulations to the cloud meant the company could greatly reduce this overhead while directly addressing the need to scale with demand.

Using CfnCluster, AWS’s framework for deploying and maintaining high-performance computing clusters on AWS, Flux7 migrated the manufacturer’s HPC simulations to the cloud and implemented autoscaling on the compute nodes. Because the front end remained transparent to users, the infrastructure gained agility without affecting them. Before the move to AWS, compute usage could jump by 50% from one month to the next; now AWS supplies that spare capacity on an as-needed, elastic basis, saving compute, financial and planning resources.
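CfnCluster's internal scaling logic is not reproduced here. As a simplified illustration of queue-driven autoscaling for compute nodes, the hypothetical function below sizes the compute fleet from the job slots that are queued and running, clamped to assumed minimum and maximum node counts:

```python
import math


def desired_compute_nodes(pending_slots, running_slots, slots_per_node,
                          min_nodes=0, max_nodes=100):
    """Nodes needed for all queued and running work, clamped to cluster limits.

    All parameters are hypothetical; a real scheduler integration would read
    the slot counts from the job queue.
    """
    if slots_per_node <= 0:
        raise ValueError("slots_per_node must be positive")
    # Round up: a partially filled node is still a node that must exist.
    needed = math.ceil((pending_slots + running_slots) / slots_per_node)
    return max(min_nodes, min(max_nodes, needed))
```

For example, 30 queued and 34 running slots on 16-slot nodes call for 4 nodes. With `min_nodes=0`, the fleet can shrink to nothing between simulation runs, which is where elastic capacity avoids paying for peak demand year-round.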

Serverless Monitoring and Notification

Furthering its pursuit of DevOps automation, the customer wanted to automate its monitoring and notification system. The goal was to use advanced automation to alert operations and information security teams to any known issues surfacing in an account, such as an Amazon VPC that is running out of IP addresses, or violations of the corporate security standard, such as Amazon RDS database volumes that are not encrypted or lack a required tag. The customer also wanted the system to be extensible, so that new rules could be added conveniently and the same system could audit multiple AWS accounts.
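The extensibility requirement suggests a rule registry: each check is a function, and adding a rule means adding a function. A minimal sketch follows, assuming the inventory dict has already been collected from the EC2 `describe_subnets` and RDS `describe_db_instances` API responses; the 10% threshold and the `CostCenter` tag are hypothetical. It subtracts the five addresses AWS reserves in every subnet when computing usable IPs.

```python
import ipaddress

RULES = []
REQUIRED_TAG = "CostCenter"  # hypothetical corporate tagging standard


def rule(func):
    """Register an audit rule; each rule yields human-readable findings."""
    RULES.append(func)
    return func


@rule
def subnets_low_on_ips(inventory, threshold=0.1):
    for s in inventory.get("Subnets", []):
        # AWS reserves five addresses in every subnet.
        usable = ipaddress.ip_network(s["CidrBlock"]).num_addresses - 5
        free = s["AvailableIpAddressCount"]
        if free < threshold * usable:
            yield f"{s['VpcId']}/{s['SubnetId']}: only {free} of {usable} IPs free"


@rule
def rds_encryption_and_tags(inventory):
    for db in inventory.get("DBInstances", []):
        name = db["DBInstanceIdentifier"]
        if not db.get("StorageEncrypted", False):
            yield f"{name}: storage is not encrypted"
        tag_keys = {t["Key"] for t in db.get("TagList", [])}
        if REQUIRED_TAG not in tag_keys:
            yield f"{name}: missing required tag {REQUIRED_TAG}"


def audit(account_id, inventory):
    """Run every registered rule against one account's inventory."""
    return [f"[{account_id}] {finding}" for r in RULES for finding in r(inventory)]
```

Running the same `audit` function once per account inventory is what lets one system cover many accounts.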

With dozens of AWS accounts to audit and monitor continuously, the teams used AWS Service Catalog and its cross-account sharing feature. Now, when a new account is added, the AWS Service Catalog product is shared with that account and deployed there, and the account is added to the list of audited accounts.

The teams also created a deployment pipeline using AWS CodePipeline, AWS Lambda, GitHub, AWS CloudFormation and Python scripts. Audit data is logged to Amazon CloudWatch Logs, and an AWS Lambda function is triggered to copy it into the business’s existing in-house ELK cluster, where the teams set up a dashboard to view and use the audit information.
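The pipeline's Lambda code is not shown in the source. As a sketch, assuming the function is attached to a CloudWatch Logs subscription filter, the handler below decodes the base64-encoded, gzip-compressed payload that CloudWatch Logs delivers and renders the log events in the Elasticsearch `_bulk` NDJSON format. The index name is hypothetical, and the HTTP request to the in-house ELK cluster is left out.

```python
import base64
import gzip
import json
from datetime import datetime, timezone

INDEX = "aws-audit"  # hypothetical index name


def decode_cloudwatch_event(event):
    """Unpack the payload a CloudWatch Logs subscription delivers to Lambda."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def to_bulk_body(payload, index=INDEX):
    """Render log events as an Elasticsearch _bulk request body (NDJSON)."""
    if payload.get("messageType") != "DATA_MESSAGE":
        # CONTROL_MESSAGE records carry no log data.
        return ""
    lines = []
    for ev in payload["logEvents"]:
        # Action line, then document line; reusing the event ID avoids duplicates on retry.
        lines.append(json.dumps({"index": {"_index": index, "_id": ev["id"]}}))
        ts = datetime.fromtimestamp(ev["timestamp"] / 1000, tz=timezone.utc)
        lines.append(json.dumps({
            "@timestamp": ts.isoformat(),
            "logGroup": payload["logGroup"],
            "logStream": payload["logStream"],
            "message": ev["message"],
        }))
    # The bulk API requires a trailing newline.
    return "\n".join(lines) + "\n" if lines else ""


def handler(event, context):
    body = to_bulk_body(decode_cloudwatch_event(event))
    # POST `body` to the cluster's /_bulk endpoint with
    # Content-Type: application/x-ndjson (HTTP client omitted in this sketch).
    return {"bulk_lines": body.count("\n")}
```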

Systems Management

Another goal of this Fortune 500 corporation was to use automation to simplify instance maintenance and improve security and compliance in the process. To do so, the teams used AWS Systems Manager (SSM), relying on encrypted parameters in the SSM Parameter Store to handle secrets. Because the organization already follows solid AWS IAM practices for permissions, it was easy to provide the necessary separation of AWS KMS keys for encrypted parameters.
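Reading a secret from Parameter Store is a `get_parameter` call with `WithDecryption=True`; the separation described above comes from IAM. As a hedged sketch, the hypothetical helper below builds a policy that lets one team read only the parameters under its own path and decrypt only with its own KMS key. The path layout (`/<team>/...`) and all argument values are assumptions.

```python
import json


def team_secrets_policy(team, region, account_id, kms_key_arn):
    """IAM policy letting one team read only its own encrypted parameters.

    Assumes parameters are named /<team>/... (hypothetical convention).
    """
    param_arn = f"arn:aws:ssm:{region}:{account_id}:parameter/{team}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadTeamParameters",
                "Effect": "Allow",
                "Action": ["ssm:GetParameter", "ssm:GetParameters"],
                "Resource": param_arn,
            },
            {
                # Decryption is allowed only with this team's key, so another
                # team's encrypted parameters stay unreadable.
                "Sid": "DecryptWithTeamKey",
                "Effect": "Allow",
                "Action": "kms:Decrypt",
                "Resource": kms_key_arn,
            },
        ],
    }


policy_json = json.dumps(
    team_secrets_policy(
        "team-a", "us-east-1", "123456789012",
        "arn:aws:kms:us-east-1:123456789012:key/example",  # placeholder key ARN
    ),
    indent=2,
)
```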

Flux7 used AWS SSM Run Command to create an API for handling common administrative tasks and rebuilt the company’s AMI baking process as a serverless setup, in which newly created images were scanned and baked as encrypted images. Last, by automating EDF Inspectors, Flux7 helped the customer meet its compliance needs using AWS Systems Manager Patch Manager, State Manager and Inventory, with compliance data ingested into an Elasticsearch cluster.
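The source does not describe the API Flux7 built. A minimal sketch, assuming a hypothetical allow-list of tasks, wraps the SSM `send_command` call with the built-in `AWS-RunShellScript` document so that callers can request only predefined administrative tasks. The client is passed in, so either a `boto3.client("ssm")` or a test stub can be used.

```python
# Hypothetical allow-list of administrative tasks exposed through the API.
ADMIN_TASKS = {
    "disk-usage": ["df -h"],
    "restart-app": ["sudo systemctl restart app.service"],
    "clear-tmp": ["sudo find /tmp -type f -mtime +7 -delete"],
}


def run_admin_task(ssm_client, task, instance_ids):
    """Send an allow-listed task to instances via SSM Run Command.

    Returns the command ID so the caller can poll for results.
    """
    if task not in ADMIN_TASKS:
        raise ValueError(f"unknown task: {task}")
    if not instance_ids:
        raise ValueError("no target instances")
    response = ssm_client.send_command(
        InstanceIds=list(instance_ids),
        DocumentName="AWS-RunShellScript",
        Parameters={"commands": ADMIN_TASKS[task]},
        Comment=f"admin task: {task}",
    )
    return response["Command"]["CommandId"]
```

Restricting callers to named tasks, rather than accepting arbitrary shell commands, keeps the API auditable. The returned command ID can be passed, together with an instance ID, to `get_command_invocation` to retrieve each instance's output.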

Amazon VPC Enables Faster Onboarding

Having proved the distinct benefits of AWS automation to both the internal and external customer experience, the firm began spreading DevOps process automation further throughout the organization. One such step was automating the Amazon VPC provisioning process.

Before this project, the group had a manual provisioning process that involved many highly repetitive tasks. Although the company had a well-documented process for deploying Amazon VPCs, any manual process opens the door to human error; here, a single mistake meant restarting the process from the beginning, consuming unnecessary resources.

As a result, Flux7 helped automate Amazon VPC provisioning by turning the documented process into templates, with code replacing documentation. When new steps were added to the process, they were added to the code, eliminating the problem of outdated documentation. This also ensured that new procedures were followed consistently, rather than operators forgetting a new step or following an old process out of habit. Finally, automation let the teams codify institutional knowledge, reducing the issues caused when people leave the organization while increasing the number of operators who can effectively deploy an Amazon VPC.

Flux7 created a single-screen front-end interface containing everything an operator needs to create an Amazon VPC; filling out and submitting the form provisions an Amazon VPC with the required parameters. Everything else happens on the back end through automation.
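As an illustration of code replacing the documented procedure, the hypothetical function below turns the fields such a form might collect (a name, a CIDR block and a list of Availability Zones) into a CloudFormation template, carving one subnet per zone out of the VPC range. It is a sketch only: a production template would also need route tables, gateways and whatever other resources the documented process specifies.

```python
import ipaddress


def vpc_template(name, cidr, availability_zones, subnet_prefix=24):
    """Turn hypothetical form fields into a CloudFormation template (as a dict)."""
    network = ipaddress.ip_network(cidr)
    # Generator of consecutive /subnet_prefix blocks inside the VPC range.
    subnets = network.subnets(new_prefix=subnet_prefix)
    resources = {
        "VPC": {
            "Type": "AWS::EC2::VPC",
            "Properties": {
                "CidrBlock": str(network),
                "EnableDnsSupport": True,
                "EnableDnsHostnames": True,
                "Tags": [{"Key": "Name", "Value": name}],
            },
        }
    }
    for i, az in enumerate(availability_zones):
        try:
            block = next(subnets)
        except StopIteration:
            raise ValueError(
                f"{cidr} is too small for {len(availability_zones)} "
                f"/{subnet_prefix} subnets"
            ) from None
        resources[f"Subnet{i + 1}"] = {
            "Type": "AWS::EC2::Subnet",
            "Properties": {
                "VpcId": {"Ref": "VPC"},
                "CidrBlock": str(block),
                "AvailabilityZone": az,
                "Tags": [{"Key": "Name", "Value": f"{name}-{az}"}],
            },
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}
```

The resulting dict can be serialized with `json.dumps` and passed as `TemplateBody` to CloudFormation's `create_stack`. Validating the CIDR range before anything is deployed catches the kind of input mistake that would otherwise force a manual process to start over.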

Taking Amazon VPC provisioning from many days to a single click freed up important resources, added consistency and decreased the opportunity for human error. Amazon VPC automation has been a transformational change, making the overall team more agile and responsive to business needs while allowing new teams to onboard even faster.

Conclusion

This Fortune 500 manufacturer continues its agile adoption of the EDF, using it as a tool to spread DevOps best practices throughout the enterprise. As teams adopt, learn and master AWS skills and DevOps processes, continuous improvement is measured by Landing Zone maturity, the number of high-velocity (revenue-contributing) applications migrated, and how often best practices are used. As an adoption model, the EDF has helped the company excel at onboarding new teams, spreading the business benefits of constant innovation and greater agility, which continue to translate into unparalleled customer experiences.
