The Flux7 Enterprise DevOps Framework

- June 22, 2017

Flux7 DevOps consultants have worked with more than 150 companies over the years as they have gone through the DevOps transformation process. We've learned a lot along the way, including the patterns that emerge in the DevOps journey and where most organizations land or envision landing. Today we'd like to share that journey with you and, more importantly, how we encourage you to think about a DevOps framework that helps get your organization there.

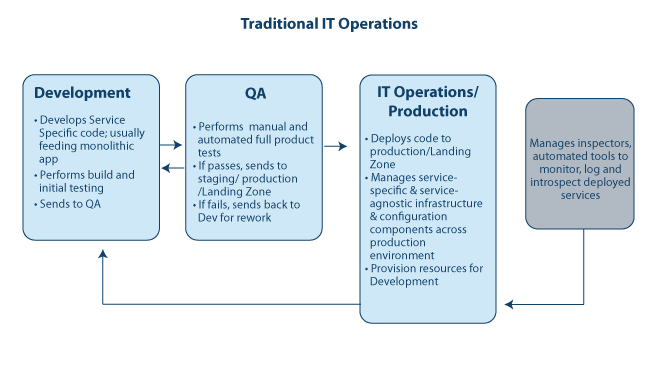

Before we go forward, however, we need to take you one step back, to a more traditional IT framework, so that we can compare and contrast it with the new DevOps framework.

As you are likely aware, in a more traditional development/IT operations framework, application teams are primarily in charge of their own code that is to be deployed. A QA release team often works in the middle of the application release process; once it finishes testing the code, it hands the code artifacts over to the IT operations team for release to production. In this model, the IT operations team is in charge of all service-agnostic components, such as the data center and networking, as well as service-specific components, like getting a MySQL 5.0 database up and running before an app can be deployed. In short, all application dependencies are traditionally the responsibility of IT operations.

As you can see from this graphic, inspectors are the checks and monitoring applied within the environment. In this traditional IT framework, inspection consists primarily of production monitoring of system metrics like CPU or memory usage.

The Flux7 EDF

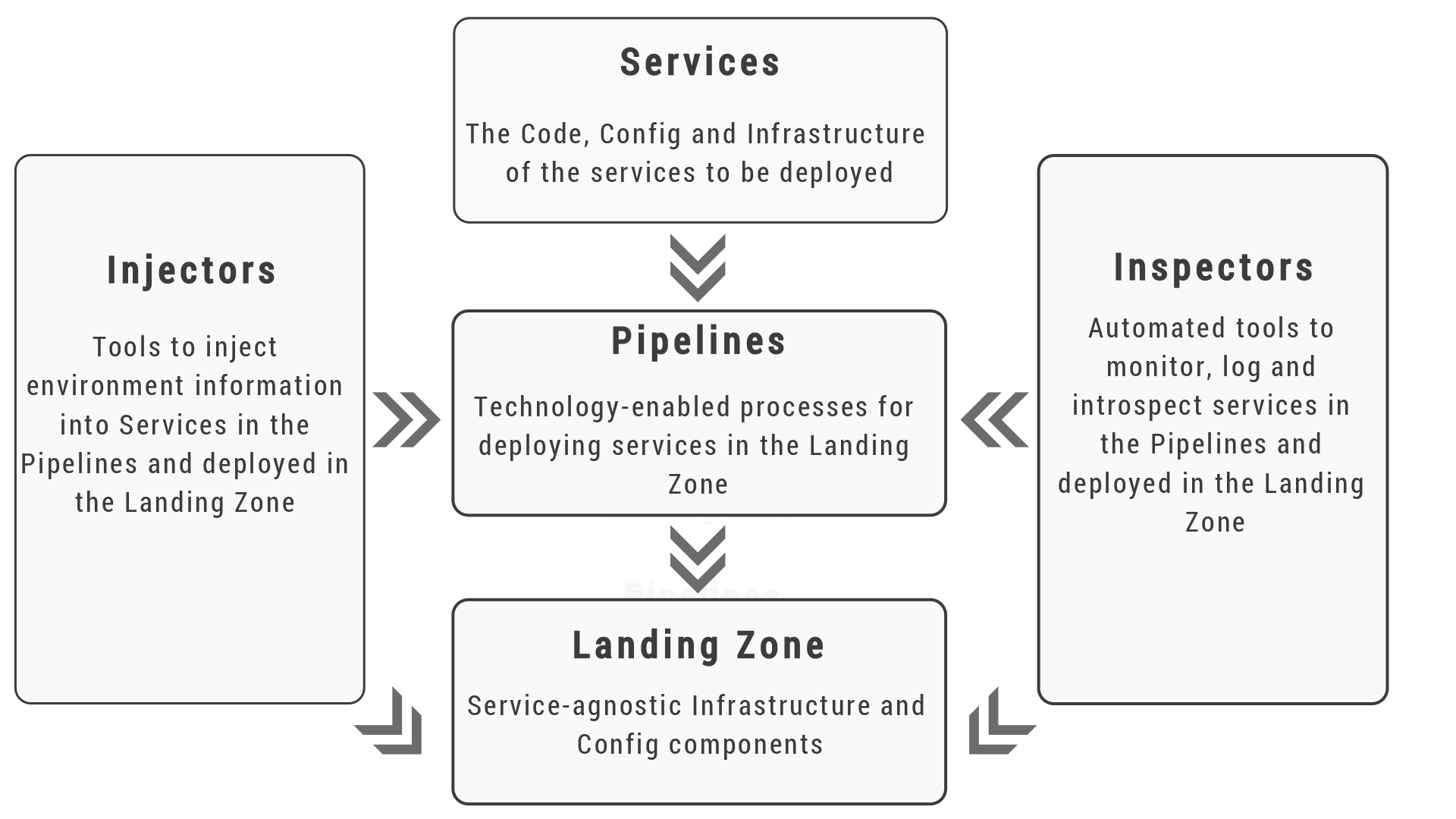

In contrast to this traditional IT model stands Flux7's Enterprise DevOps Framework (EDF).

As I noted above, this framework is based on our extensive experience as a DevOps sherpa to more than 150 customers. It is focused on helping developers and IT operations execute at full speed, with minimal dependencies, to achieve specific business goals.

This new framework brings a significant change for service teams, which are being asked to own more and more. They own not just code, but also configuration (via Chef, Puppet or Ansible), infrastructure defined in tools like Terraform and AWS CloudFormation that create virtual machines, and, frankly, everything specific to the services they create. In this DevOps-driven model, the service code and all of its relevant dependencies are owned by the service team, which lets the team move faster because it has fewer dependencies on other groups.
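As an illustration of infrastructure that a service team owns as code, here is a minimal Python sketch that builds an AWS CloudFormation template as a dictionary. The service name, the `AppServer` logical ID and the `t2.micro` default are hypothetical. The subnet and AMI are declared as template parameters rather than hard-coded, which leaves room for environment-specific values to be supplied at deploy time.

```python
import json


def service_template(service_name: str, instance_type: str = "t2.micro") -> dict:
    """Build a minimal CloudFormation template owned by a service team.

    The service name, instance type and logical ID "AppServer" are hypothetical.
    SubnetId and ImageId are parameters so the environment can supply them.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Infrastructure owned by the {service_name} service team",
        "Parameters": {
            "SubnetId": {"Type": "AWS::EC2::Subnet::Id"},
            "ImageId": {"Type": "AWS::EC2::Image::Id"},
        },
        "Resources": {
            "AppServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    # Resolved from the parameters at stack creation time.
                    "ImageId": {"Ref": "ImageId"},
                    "InstanceType": instance_type,
                    "SubnetId": {"Ref": "SubnetId"},
                },
            }
        },
    }


if __name__ == "__main__":
    # Print the template as JSON, the form CloudFormation accepts.
    print(json.dumps(service_template("orders"), indent=2))
```

Because the template lives with the service's code, the service team can change its infrastructure in the same change set as the application itself.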

In this new DevOps framework, the traditional IT operations team is converted into a concept we call the landing zone. The landing zone is where services deploy; it is made up of service-agnostic components and catches services as they are delivered via pipelines. (We define pipelines as processes designed specifically to automate the delivery of services into the landing zone.) In the EDF, the concept of a service-agnostic landing zone is critical, as is the idea of service teams owning more of their dependencies.
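To make the split between pipelines and the landing zone concrete, here is a minimal Python sketch. It is not Flux7's implementation: `LandingZone`, `run_pipeline` and the `network` attribute are hypothetical names, and each pipeline stage is modeled as a plain function that takes a service name and an artifact and returns a new artifact.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A pipeline stage takes (service name, artifact) and returns the artifact it produced.
Stage = Callable[[str, str], str]


@dataclass
class LandingZone:
    """Service-agnostic environment that services deploy into.

    `network` stands in for shared components such as a VPC; it is hypothetical.
    The zone records what landed but holds nothing service-specific.
    """
    name: str
    network: str
    deployed: Dict[str, str] = field(default_factory=dict)

    def accept(self, service: str, artifact: str) -> None:
        self.deployed[service] = artifact


def run_pipeline(service: str, artifact: str, stages: List[Stage], zone: LandingZone) -> str:
    """Run every automated stage in order, then deliver the result to the landing zone."""
    for stage in stages:
        artifact = stage(service, artifact)
    zone.accept(service, artifact)
    return artifact


if __name__ == "__main__":
    def build(service: str, artifact: str) -> str:
        # Hypothetical build step: tag the artifact as built.
        return f"{artifact}+build"

    prod = LandingZone(name="prod", network="shared-vpc")
    run_pipeline("orders", "orders-1.4.2", [build], prod)
    print(prod.deployed)  # {'orders': 'orders-1.4.2+build'}
```

The landing zone only exposes `accept`, so any number of service pipelines can deliver into it without the zone knowing anything about their internals.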

In this new model, inspectors also play a role, but they now have two jobs. They inspect components:

- As they move through the pipeline. This more traditional inspection includes things like security and audit checks.
- Once they are running in the landing zone. For example, image analysis on a container checks what software is installed in that container. This second level of inspection provides a level of security and vulnerability analysis not typically performed on virtual machines.
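The two inspection points can be sketched in Python as follows. This is a hedged illustration, not a real scanner: the `KNOWN_VULNERABLE` feed is hypothetical, the pipeline check looks only for SSH opened to `0.0.0.0/0` in a CloudFormation-style template, and the package listing is assumed to be tab-separated `package<TAB>version` lines, similar to what `dpkg-query -W` prints on Debian-based images.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical vulnerability feed: (package, version) pairs known to be affected.
KNOWN_VULNERABLE: Set[Tuple[str, str]] = {("openssl", "1.0.1f"), ("bash", "4.3")}


def pipeline_inspector(template: Dict) -> List[str]:
    """Pipeline-time security check on a CloudFormation-style template.

    Flags security-group ingress rules that expose SSH (port 22) to 0.0.0.0/0.
    An IpProtocol of "-1" means all traffic, so it is treated as covering port 22.
    """
    findings = []
    for name, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::EC2::SecurityGroup":
            continue
        for rule in resource.get("Properties", {}).get("SecurityGroupIngress", []):
            if rule.get("CidrIp") != "0.0.0.0/0":
                continue
            all_traffic = str(rule.get("IpProtocol")) == "-1"
            covers_ssh = int(rule.get("FromPort", 0)) <= 22 <= int(rule.get("ToPort", -1))
            if all_traffic or covers_ssh:
                findings.append(f"{name}: SSH open to 0.0.0.0/0")
    return findings


def parse_package_list(text: str) -> Dict[str, str]:
    """Parse assumed "package<TAB>version" lines listed from a running container."""
    packages = {}
    for line in text.splitlines():
        if "\t" in line:
            pkg, version = line.split("\t", 1)
            packages[pkg.strip()] = version.strip()
    return packages


def runtime_inspector(installed: Dict[str, str]) -> List[str]:
    """Runtime image analysis: report installed packages that appear in the feed."""
    return sorted(f"{pkg} {ver}" for pkg, ver in installed.items() if (pkg, ver) in KNOWN_VULNERABLE)


if __name__ == "__main__":
    template = {"Resources": {"WebSG": {
        "Type": "AWS::EC2::SecurityGroup",
        "Properties": {"SecurityGroupIngress": [
            {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22, "CidrIp": "0.0.0.0/0"}]},
    }}}
    print(pipeline_inspector(template))  # ['WebSG: SSH open to 0.0.0.0/0']
    print(runtime_inspector(parse_package_list("openssl\t1.0.1f\nzlib\t1.2.11\n")))  # ['openssl 1.0.1f']
```

In practice a vulnerability scanner would replace the hard-coded feed; the point of the sketch is that the same component is checked once before it lands and again while it runs.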

The new model also adds the idea of injectors. When you have a framework like the EDF with everything moving at full speed, the last thing you want is for people to stop the process to pass each other information specific to the applications landing in the landing zone. Imagine, for example, having to interrupt automation just to pass along a subnet ID.

To this end, most of the companies we work with have adopted automated tools that inject environment-specific information into their service templates on the fly as they go through the pipeline. This level of automation helps them reach their full potential and protects against fat-finger errors. We encourage our customers to use HashiCorp Vault and HashiCorp Consul for this purpose, so that service and configuration information is available for services to pull when needed.
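Here is a minimal Python sketch of an injector built on Consul's key/value store. It assumes the body returned by Consul's KV HTTP API for a recursive read (`GET /v1/kv/<prefix>?recurse`), which is a JSON array of entries whose `Value` field is base64-encoded. The `landing-zone/prod` key layout and the `subnet_id` key are hypothetical, and the template uses Python's `string.Template` placeholders rather than any particular templating tool. To stay runnable offline, the sketch decodes a sample response body instead of calling Consul.

```python
import base64
import json
from string import Template
from typing import Dict


def values_from_consul_kv(response_body: str, prefix: str) -> Dict[str, str]:
    """Decode a Consul KV API response body into {variable name: value}.

    Each entry's "Value" is base64-encoded; folder keys have a null Value and
    are skipped. The prefix is stripped so keys can serve as template variables.
    """
    values = {}
    for entry in json.loads(response_body):
        if entry.get("Value") is None:
            continue
        name = entry["Key"][len(prefix):].strip("/")
        values[name] = base64.b64decode(entry["Value"]).decode("utf-8")
    return values


def inject(template_text: str, values: Dict[str, str]) -> str:
    """Fill ${placeholders}; substitute() raises KeyError if a value is missing."""
    return Template(template_text).substitute(values)


if __name__ == "__main__":
    # Stand-in for the body Consul would return; the key layout is hypothetical.
    body = json.dumps([
        {"Key": "landing-zone/prod/", "Value": None},
        {"Key": "landing-zone/prod/subnet_id",
         "Value": base64.b64encode(b"subnet-0abc1234").decode("ascii")},
    ])
    values = values_from_consul_kv(body, "landing-zone/prod")
    print(inject("SubnetId: ${subnet_id}", values))  # SubnetId: subnet-0abc1234
```

Using `substitute()` rather than `safe_substitute()` is deliberate: a missing value stops the pipeline with an error instead of shipping a template with an unresolved placeholder.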

At the end of the day, the goal is for service and operations teams to work at their full potential and at full speed, with fewer dependencies. While many organizations are getting there one step at a time, we can learn from the patterns they have left in their wake and shorten the time it takes to become a DevOps-empowered organization. Using the EDF as a foundation for their efforts has helped our clients treat DevOps as a puzzle to solve rather than an enigma, paving the way to a successful DevOps transformation.

Read our AWS Case Studies, or check out our DevOps resource page for additional tips, tricks and best practices.
