Analyzing AWS m3 Instances for Performance & Bandwidth - Flux7 Blog

- September 03, 2014

Amazon Web Services (AWS) EC2 offers a variety of instance families and types, each serving specific use cases. The instance families include:

- General Purpose: T2, M3

- Compute Optimized: C3

- Memory Optimized: R3

- Storage Optimized: I2

- GPU: G2

In this post, we take an in-depth look at the general purpose M3 family, which balances compute, storage and network resources.

M3 is the current generation of the ‘m’ family; m1 is the previous generation. First, a quick refresher on M3 basics:

Best suited for: Data processing, caching and running backend servers.

Features:

- Processor: Intel Xeon E5-2670 (Sandy Bridge or Ivy Bridge)

- Storage: SSD

We analyzed m3 instances in three areas: CPU performance, disk bandwidth and network performance.

CPU Performance

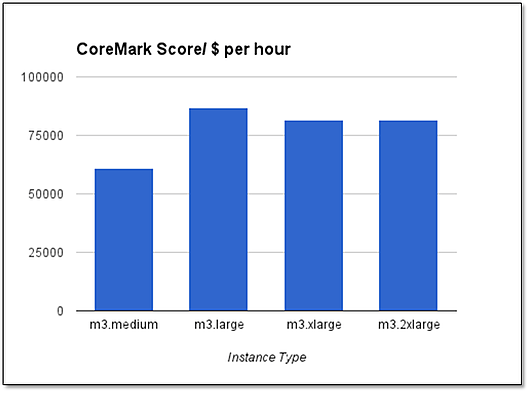

To measure the CPU performance of m3 instances, we used CoreMark, an industry-standard CPU benchmark. Read the experiment setup here: CoreMark setup.
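Each row of the table below reports an average over repeated CoreMark runs along with its standard deviation. A minimal Python sketch of that aggregation follows, assuming each run's console output is saved as a string and that the score is read from CoreMark's `Iterations/Sec` line. The post does not say whether a sample or population standard deviation was used; this sketch uses the sample version (`statistics.stdev`), and the helper names are hypothetical.

```python
import re
import statistics

# CoreMark prints a line such as "Iterations/Sec   : 6879.731" after each run.
_ITER_RE = re.compile(r"Iterations/Sec\s*:\s*([0-9.]+)")


def parse_coremark_score(log: str) -> float:
    """Return the Iterations/Sec value from one CoreMark run's output."""
    match = _ITER_RE.search(log)
    if match is None:
        raise ValueError("no 'Iterations/Sec' line found in CoreMark output")
    return float(match.group(1))


def summarize(scores: list[float]) -> dict[str, float]:
    """Mean, sample standard deviation, and std dev as a percentage of the mean."""
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)  # sample std dev; needs at least two runs
    return {"mean": mean, "stdev": stdev, "stdev_pct": 100.0 * stdev / mean}
```

The `stdev_pct` value corresponds to the "Std Dev (%)" column: for m3.medium, 30.062 / 6879.731 × 100 ≈ 0.4369.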

The average CoreMark scores for the m3 instances are as follows:

| Instance Type | CoreMark Score | Std Dev | Std Dev (%) | CoreMark per $/hour |
|---|---|---|---|---|
| m3.medium | 6879.731 | 30.062 | 0.4369 | 60882.577 |
| m3.large | 19539.593 | 101.749 | 0.5207 | 86842.639 |
| m3.xlarge | 36699.549 | 75.971 | 0.2070 | 81554.553 |
| m3.2xlarge | 73522.869 | 579.416 | 0.788 | 81692.077 |

The graph at the top of this section plots these CoreMark scores.

m3.large delivers the highest CoreMark score per dollar (86,842.6), about 6% more than m3.xlarge and m3.2xlarge and roughly 43% more than m3.medium. In other words, it offers the best CPU performance per dollar in the m3 family.
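The per-dollar column is each instance's CoreMark score divided by its hourly price. The post does not list those prices, but they can be backed out of the table; the sketch below does that and ranks the instance types by value. The implied prices it prints (about $0.113, $0.225, $0.450 and $0.900 per hour) are derived from the table's own numbers, not quoted from an AWS price list, and the helper names are hypothetical.

```python
# (CoreMark score, CoreMark per $/hour) taken from the table above.
COREMARK = {
    "m3.medium": (6879.731, 60882.577),
    "m3.large": (19539.593, 86842.639),
    "m3.xlarge": (36699.549, 81554.553),
    "m3.2xlarge": (73522.869, 81692.077),
}


def implied_hourly_price(score: float, score_per_dollar: float) -> float:
    """score_per_dollar = score / price, so price = score / score_per_dollar."""
    return score / score_per_dollar


def rank_by_value(results: dict[str, tuple[float, float]]) -> list[str]:
    """Instance types ordered from best to worst score per dollar."""
    return sorted(results, key=lambda name: results[name][1], reverse=True)


for name in rank_by_value(COREMARK):
    score, per_dollar = COREMARK[name]
    price = implied_hourly_price(score, per_dollar)
    print(f"{name:<11} {per_dollar:>10.1f} per $  (implied ${price:.3f}/hour)")
```

Running it lists m3.large first, followed by m3.2xlarge, m3.xlarge and m3.medium.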

Disk Bandwidth

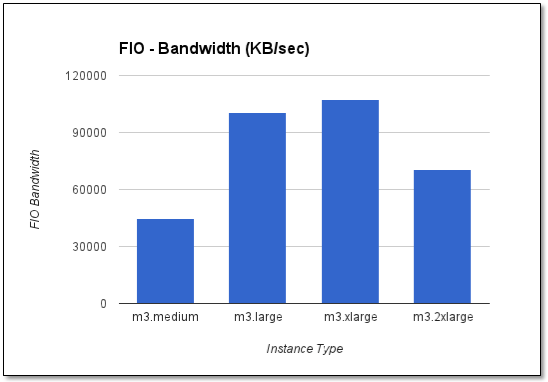

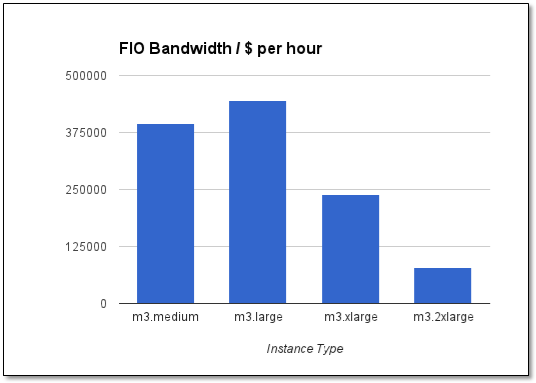

We used FIO, a tool for benchmarking and stress-testing I/O, to measure the disk bandwidth of m3 instances. Read the experiment setup here: FIO setup.

We benchmarked four I/O workloads with FIO:

- Sequential Read

- Sequential Write

- Sequential Read-Write Mix

- Random Read-Write Mix
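The exact FIO job parameters are on the linked setup page rather than in this post. As one plausible illustration, the Python sketch below maps the four workloads onto FIO's `--rw` modes (`read`, `write`, `rw` for sequential mixed and `randrw` for random mixed I/O) and builds a command line for each. The file path, size, block size and runtime are placeholder assumptions, not the authors' settings, and `fio_command` and `total_bandwidth_kib_s` are hypothetical helpers.

```python
import shlex

# FIO --rw mode for each workload in the list above.
WORKLOADS = {
    "seq-read": "read",
    "seq-write": "write",
    "seq-mix": "rw",       # sequential reads and writes, 50/50 by default
    "rand-mix": "randrw",  # random reads and writes, 50/50 by default
}


def fio_command(name: str, rw: str, filename: str = "/mnt/fio-test",
                size: str = "1G", block_size: str = "4k",
                runtime_s: int = 60) -> list[str]:
    """Build an FIO command line for one workload (all defaults are placeholders)."""
    return [
        "fio",
        f"--name={name}",
        f"--rw={rw}",
        f"--filename={filename}",
        f"--size={size}",
        f"--bs={block_size}",
        "--direct=1",         # O_DIRECT: bypass the page cache so the disk is measured
        "--ioengine=libaio",  # Linux native asynchronous I/O
        f"--runtime={runtime_s}",
        "--time_based",       # keep running for the full runtime
        "--output-format=json",
    ]


def total_bandwidth_kib_s(report: dict) -> float:
    """Sum read and write bandwidth (KiB/s) of the first job in FIO's JSON output."""
    job = report["jobs"][0]
    return job["read"]["bw"] + job["write"]["bw"]


for name, rw in WORKLOADS.items():
    print(shlex.join(fio_command(name, rw)))
```

Each printed command can be run on the instance and its JSON output passed through `json.loads` and `total_bandwidth_kib_s`.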

| Instance Type | FIO Bandwidth | FIO Bandwidth per $/hour |
|---|---|---|
| m3.medium | 44690.956 | 395495.1915 |
| m3.large | 100306.234 | 445805.4883 |
| m3.xlarge | 107426.631 | 238725.8486 |
| m3.2xlarge | 70758.077 | 78620.0860 |

As the graphs show, m3.large offers the best FIO bandwidth per dollar (about 445,805, versus 395,495 for the runner-up, m3.medium), while m3.xlarge delivers the highest raw bandwidth. One major drawback, however, is that m3.large has limited SSD storage capacity, so it may not suit applications that need a lot of local storage.

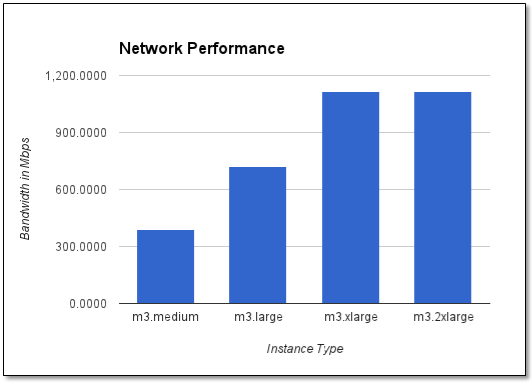

Network Performance

Network performance is a common concern for anyone new to the cloud (AWS). Using Iperf, the industry-standard network benchmarking tool, we measured the bandwidth of each m3 instance type. Read the experiment setup here: IPerf tool setup.
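The post links to its Iperf setup rather than describing it. Purely as an illustration, the sketch below swaps in iperf3, whose `-J` flag prints a JSON report, and reads the receiver-side throughput from that report. It assumes iperf3 is installed on both instances and that `iperf3 -s` is already listening on the server; the server address and 30-second duration are placeholders, and the helper names are hypothetical.

```python
import json
import subprocess


def run_iperf3(server: str, seconds: int = 30) -> dict:
    """Run an iperf3 client against `server` and return the parsed JSON report.

    Assumes `iperf3 -s` is already running on `server`.
    """
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        check=True,           # raise CalledProcessError if iperf3 fails
        capture_output=True,  # the JSON report is written to stdout
        text=True,
    )
    return json.loads(result.stdout)


def received_mbps(report: dict) -> float:
    """Throughput measured at the receiver, in Mbps (10**6 bits per second)."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


# Usage, with a hypothetical private IP for the server instance:
# print(received_mbps(run_iperf3("10.0.0.12")))
```

Repeating the run several times and feeding the results to a mean and standard deviation calculation yields figures in the same form as the table that follows.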

| Instance Type | Bandwidth (Mbps) | Std Dev (%) |
|---|---|---|
| m3.medium | 391.0000 | 0.0000 |
| m3.large | 723.0000 | 0.0583 |
| m3.xlarge | 1,116.1600 | 0.0000 |
| m3.2xlarge | 1,116.1600 | 0.0000 |

From the graph, you can see that m3.xlarge and m3.2xlarge deliver the highest bandwidth. Interestingly, their network bandwidth appears to hit a ceiling: both were limited to 1,116.16 Mbps (approximately 1.09 Gbps). Two observations point to a cap: m3.2xlarge, despite being the larger instance, measured exactly the same bandwidth as m3.xlarge, and neither showed any run-to-run variation (a standard deviation of 0%).
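The 1,116.16 Mbps ceiling in the table works out to about 1.09 Gbps when divided by 1,024, or about 1.12 Gbps when divided by 1,000, the decimal convention normally used for network rates. The sketch below does both conversions and flags the instance types that share the top bandwidth with near-zero variation. The 0.1% tolerance in the hypothetical `capped_types` helper is an arbitrary assumption, not a threshold from the post.

```python
# (bandwidth in Mbps, std dev as %) from the network table above.
NETWORK = {
    "m3.medium": (391.0, 0.0),
    "m3.large": (723.0, 0.0583),
    "m3.xlarge": (1116.16, 0.0),
    "m3.2xlarge": (1116.16, 0.0),
}


def mbps_to_gbps(mbps: float, base: int = 1000) -> float:
    """Convert Mbps to Gbps: base=1000 is decimal (SI), base=1024 is binary."""
    return mbps / base


def capped_types(results: dict[str, tuple[float, float]],
                 tol_pct: float = 0.1) -> list[str]:
    """Instance types at the highest bandwidth whose std dev is <= tol_pct (assumed threshold)."""
    top = max(bw for bw, _ in results.values())
    return [name for name, (bw, sd_pct) in results.items()
            if bw == top and sd_pct <= tol_pct]


ceiling = NETWORK["m3.xlarge"][0]
print(f"{mbps_to_gbps(ceiling):.3f} Gbps (decimal)")            # 1.116 Gbps (decimal)
print(f"{mbps_to_gbps(ceiling, base=1024):.3f} Gbps (binary)")  # 1.090 Gbps (binary)
print(capped_types(NETWORK))  # ['m3.xlarge', 'm3.2xlarge']
```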
